The following article analyzes key points about Azure Functions.

📑 Table of Contents

- 💰 Q. What plans are available?

- 🎯 Q. What triggers are available?

- 📊 Q. How does autoscale work in different execution plans?

- 📄 Q. Role and structure of ‘function.json’, ‘host.json’ and ‘local.settings.json’ files?

- 🔄 Q. What are Durable Functions?

- ⚠️ Q. What are Limitations of Azure Functions? - See detailed article

- 📝 Additional Information

- 📚 References

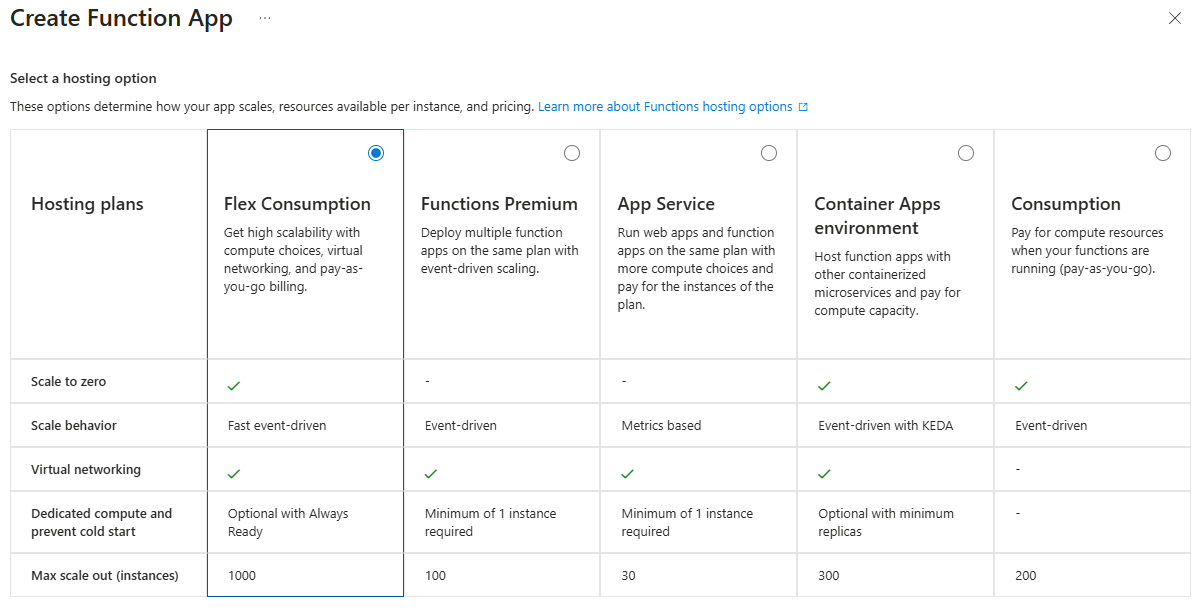

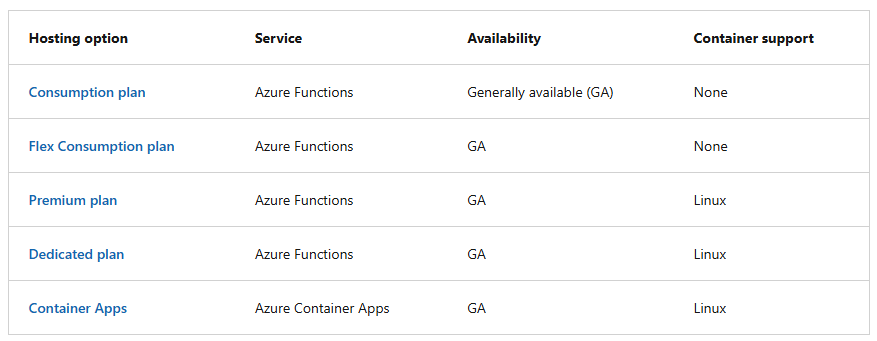

💰 Q. What plans are available?

- Consumption plan: Pay-as-you-go serverless hosting where instances are dynamically added/removed based on incoming events. You’re charged only for compute resources when functions are running (executions, execution time, and memory used).

- Pros: Lowest cost for sporadic workloads, automatic scaling, includes free monthly grant, no infrastructure management

- Cons: Cold starts when scaling from zero, 10-minute execution timeout, no VNet integration, max 200 instances (Windows) or 100 (Linux), Linux support retiring September 2028

- Flex Consumption plan: Next-generation serverless plan with improved performance and flexibility. Offers faster scaling with per-function scaling logic, reduced cold starts on preprovisioned instances, and VNet support while maintaining pay-for-execution billing model.

- Pros: Faster cold starts, VNet integration, per-function scaling (up to 1000 instances), configurable instance memory (512MB-4GB), always-ready instances option, better concurrency handling

- Cons: More complex configuration than basic Consumption, higher base cost when using always-ready instances, newer plan with some limitations (no deployment slots, limited regions), Linux only.

- Premium plan: Elastic Premium plan providing always-ready instances with prewarmed instances buffer to eliminate cold starts. Scales dynamically like Consumption but with guaranteed compute resources and enterprise features.

- Pros: No cold starts (always-ready + prewarmed instances), VNet connectivity, unlimited execution duration, Linux container support, predictable performance, up to 100 instances

- Cons: Higher cost (billed on core-seconds/memory even when idle), minimum of 1 instance always running, more complex pricing model than consumption plans

- Dedicated plan: Runs on dedicated App Service Plan VMs with other App Service resources. You have full control over the compute resources and scaling behavior.

- Pros: Predictable costs, use excess App Service capacity, custom images support, full control over scaling, can run in isolated App Service Environment, no cold starts with Always On

- Cons: Manual scaling or slower autoscale due to provisioning time for dedicated resources, most expensive option for low-traffic apps, requires Always On setting, no automatic event-driven scaling, 10-30 max instances (100 in ASE)

- Container Apps: Deploy Azure Functions as containers in a fully managed Container Apps environment. Combines Functions event-driven model with Kubernetes-based orchestration, KEDA scaling, and advanced container features.

- Pros: Custom containers with dependencies, scale to 1000 instances, VNet integration, GPU support for AI workloads, Dapr integration, unified environment with microservices, multi-revision support

- Cons: More complex setup, cold starts when scaling to zero, no deployment slots, no Functions access keys via portal, requires storage account per revision for multi-revision scenarios, Linux only
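The trade-offs above can be turned into a rough shortlist. The following is a minimal sketch, not an official decision tool: the `Plan` class and `candidate_plans` function are hypothetical names, and the figures are simply copied from this article (with 30 used as the Dedicated ceiling outside an App Service Environment), so they should be checked against current Azure documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    scale_to_zero: bool
    vnet: bool
    max_instances: int  # upper bound quoted in this article


# Values taken from the plan descriptions in this article (assumption: still current).
PLANS = [
    Plan("Consumption", scale_to_zero=True, vnet=False, max_instances=200),
    Plan("Flex Consumption", scale_to_zero=True, vnet=True, max_instances=1000),
    Plan("Premium", scale_to_zero=False, vnet=True, max_instances=100),
    Plan("Dedicated", scale_to_zero=False, vnet=True, max_instances=30),
    Plan("Container Apps", scale_to_zero=True, vnet=True, max_instances=1000),
]


def candidate_plans(need_vnet: bool, need_scale_to_zero: bool, peak_instances: int) -> list[str]:
    """Return the names of plans that satisfy all three requirements."""
    return [
        plan.name
        for plan in PLANS
        if (plan.vnet or not need_vnet)
        and (plan.scale_to_zero or not need_scale_to_zero)
        and plan.max_instances >= peak_instances
    ]


print(candidate_plans(need_vnet=True, need_scale_to_zero=True, peak_instances=500))
```

A filter like this only narrows the field; cost, cold-start tolerance, and regional availability still need to be weighed by hand.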

🎯 Q. What triggers are available?

Timer Trigger: Executes functions on a predefined schedule using CRON expressions, enabling time-based automation for recurring tasks like batch processing, cleanup jobs, or periodic data synchronization.

Pros:

- Simple configuration with standard CRON syntax

- No external dependencies or services required

- Predictable execution patterns for planning and monitoring

- Works across all hosting plans

Cons:

- No built-in retry or error handling for missed executions

- Can waste compute resources on empty runs

- Singleton execution model may cause delays in high-frequency scenarios (executions are guaranteed not to overlap, so a slow run postpones the next one)
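Timer schedules in Azure Functions are written as six-field NCRONTAB expressions, with seconds as the first field. The sketch below only splits an expression into named fields so the layout is easy to see; `split_ncrontab` is a hypothetical helper for illustration, not part of any Azure SDK, and it does not validate the individual field values.

```python
# Field order of an NCRONTAB expression as used by the timer trigger.
NCRONTAB_FIELDS = ("second", "minute", "hour", "day", "month", "day_of_week")


def split_ncrontab(expression: str) -> dict[str, str]:
    """Split a six-field NCRONTAB expression into a field-name -> value mapping."""
    parts = expression.split()
    if len(parts) != len(NCRONTAB_FIELDS):
        raise ValueError(
            f"expected {len(NCRONTAB_FIELDS)} fields, got {len(parts)}: {expression!r}"
        )
    return dict(zip(NCRONTAB_FIELDS, parts))


# "0 */5 * * * *" fires at second 0 of every fifth minute.
print(split_ncrontab("0 */5 * * * *"))
```

A five-field expression copied from a classic crontab file is rejected by this helper, which is a common source of confusion when porting schedules.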

Azure Storage Queues and Blobs Trigger: Automatically responds to new messages in Azure Storage Queues or detects new/modified blobs in Storage containers, enabling scalable asynchronous processing of stored data and files.

Pros:

- Cost-effective for simple queuing and file processing scenarios

- Built-in poison message handling for failed processing

- Automatic scaling based on queue depth

- Simple integration with other Azure Storage operations

Cons:

- Limited message size (64KB for queues, no message batching)

- Polling-based detection introduces latency (blob trigger can delay 10+ minutes on Consumption plan)

- No advanced messaging features (sessions, transactions, dead-lettering)

- Blob trigger can miss rapid changes or process same blob multiple times
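The poison message handling mentioned above can be pictured with a small in-memory simulation. This is a sketch of the idea only, not the Functions runtime: `drain_queue` is a hypothetical function, and the real runtime moves a message to a queue named `<queuename>-poison` after `maxDequeueCount` failed attempts (5 by default).

```python
from collections import deque
from typing import Callable


def drain_queue(
    messages: list[str],
    handler: Callable[[str], None],
    max_dequeue_count: int = 5,
) -> tuple[list[str], list[str]]:
    """Process messages, retrying failures until max_dequeue_count is reached."""
    queue = deque((body, 0) for body in messages)
    processed: list[str] = []
    poison: list[str] = []
    while queue:
        body, attempts = queue.popleft()
        attempts += 1
        try:
            handler(body)
            processed.append(body)
        except Exception:
            if attempts >= max_dequeue_count:
                poison.append(body)  # stand-in for the "<queuename>-poison" queue
            else:
                queue.append((body, attempts))  # message becomes visible again later
    return processed, poison


def parse_order(body: str) -> None:
    # Hypothetical handler: rejects anything that is not a numeric order id.
    if not body.isdigit():
        raise ValueError(f"not an order id: {body!r}")


print(drain_queue(["101", "oops", "102"], parse_order))
```

Monitoring the poison queue is still the application's job; the runtime only parks the message there.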

Azure Service Bus Queues and Topics Trigger: Listens to Azure Service Bus queues (point-to-point) or topics (pub-sub) to process enterprise-grade messages with advanced delivery guarantees, sessions, and transactional support.

Pros:

- Rich messaging features: sessions, transactions, duplicate detection, dead-letter queues

- Large message support (up to 100MB with Premium tier)

- Topics enable pub-sub patterns with multiple subscribers

- Strong delivery guarantees and advanced error handling

Cons:

- Higher cost compared to Storage Queues

- More complex configuration and setup

- Requires separate Service Bus namespace provisioning

- Can be overkill for simple queue scenarios

Azure Cosmos DB Trigger: Uses Cosmos DB Change Feed to detect and respond to inserts and updates in Cosmos DB containers, enabling real-time reactive processing of database changes.

Pros:

- Near real-time detection of data changes (sub-second latency)

- Processes changes in order within a partition

- Scalable across multiple function instances automatically

- Native integration with Azure Cosmos DB for NoSQL (the trigger does not cover the other APIs such as MongoDB or Cassandra)

Cons:

- Only detects inserts and updates (not deletes)

- Requires additional lease container for coordination (extra cost)

- Can be complex to handle schema changes

- Change feed consumption adds RU costs

Azure Event Hubs Trigger: Processes high-throughput streaming data from Event Hubs, enabling real-time analytics and telemetry ingestion for IoT devices, application logs, and event streaming scenarios.

Pros:

- Massive scale (millions of events per second)

- Built-in partitioning for parallel processing

- Event retention allows replay and reprocessing

- Checkpointing ensures reliable event processing

Cons:

- Higher cost for throughput units or processing units

- Complex partition management and scaling considerations

- Requires storage account for checkpointing (additional cost)

- Overkill for low-volume scenarios

HTTP/Webhook Trigger: Exposes functions as HTTP endpoints or webhooks, allowing synchronous request-response patterns for REST APIs, integrations with external services (like GitHub), and user-facing applications.

Pros:

- Flexible for building REST APIs and webhooks

- Supports all HTTP methods and custom routing

- Authorization via function keys, Azure AD, or anonymous

- Direct synchronous communication pattern

Cons:

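- Subject to timeout limits (an HTTP-triggered function has about 230 seconds to respond because of the Azure Load Balancer idle timeout, regardless of the functionTimeout setting)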

- Synchronous nature doesn’t scale as well as queue-based patterns

- Requires external load balancing for high availability

- Cold starts impact response time on serverless plans

Azure Event Grid Trigger: Subscribes to Event Grid topics to receive and react to discrete events from Azure services (like Blob created, VM state changed) or custom applications, enabling event-driven architectures at scale.

Pros:

- Push-based delivery with low latency (no polling overhead)

- Built-in retry logic with exponential backoff

- Native integration with 100+ Azure services

- Advanced filtering and routing capabilities

Cons:

- Event ordering not guaranteed

- Requires webhook endpoint validation

- 1MB event size limit

- Can duplicate events (at-least-once delivery)
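Because delivery is at-least-once, handlers are usually written to be idempotent. The sketch below deduplicates on the event's `id` field using an in-memory set; `IdempotentHandler` is a hypothetical class, and a real function app would need a durable store (a table or cache, for example) since instances are recycled and scaled out.

```python
from typing import Callable


class IdempotentHandler:
    """Wraps a processing callback and skips events whose id was already handled."""

    def __init__(self, process: Callable[[dict], None]) -> None:
        self._process = process
        self._seen: set[str] = set()  # assumption: in-memory only, for illustration

    def handle(self, event: dict) -> bool:
        """Return True if the event was processed, False if it was a duplicate."""
        event_id = event["id"]
        if event_id in self._seen:
            return False
        self._process(event)
        # Record the id only after processing succeeded, so failures can be retried.
        self._seen.add(event_id)
        return True


handled: list[str] = []
handler = IdempotentHandler(lambda event: handled.append(event["subject"]))
handler.handle({"id": "evt-1", "subject": "/blobs/report.csv"})
handler.handle({"id": "evt-1", "subject": "/blobs/report.csv"})  # redelivery, skipped
print(handled)
```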

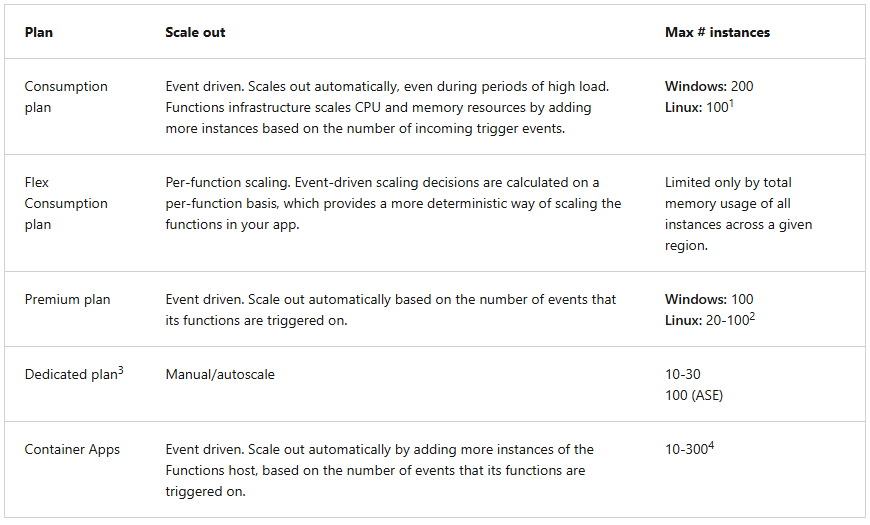

📊 Q. How does autoscale work in different execution plans?

Azure Functions uses different scaling mechanisms depending on the hosting plan. Understanding these differences is crucial for choosing the right plan for your workload.

Consumption Plan - Event-Driven Scaling:

The Azure Functions scale controller continuously monitors the rate of incoming events for each trigger type

Makes scaling decisions every few seconds based on queue depth, message age, and other trigger-specific metrics

Automatically adds or removes instances dynamically without user intervention

Scaling behavior:

- Scale-out: Instances added automatically when event load increases

- Scale-in: Instances removed when load decreases, with graceful shutdown (up to 10 minutes for running functions, default is 5 minutes)

- Scale-to-zero: When no events are present, scales down to zero instances (cold start on next event)

- Max instances: 200 (Windows) or 100 (Linux)

Scaling speed: Fast - typically scales out in seconds to minutes
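To make the idea of scaling on queue depth concrete, here is a deliberately simplified model. It is not the scale controller's actual algorithm: `desired_instances` and the `target_per_instance` parameter are assumptions introduced for illustration, and the real controller also weighs message age and other trigger-specific signals.

```python
import math


def desired_instances(queue_length: int, target_per_instance: int, max_instances: int) -> int:
    """Estimate an instance count from backlog size (simplified, hypothetical model)."""
    if queue_length <= 0:
        return 0  # scale-to-zero when there is nothing to process
    wanted = math.ceil(queue_length / target_per_instance)
    return min(max_instances, wanted)


# Backlog of 100 messages, each instance expected to handle 16 at a time,
# capped at the Consumption (Windows) limit quoted above.
print(desired_instances(queue_length=100, target_per_instance=16, max_instances=200))
```

The point of the model is the shape of the decision: the input is the event backlog, not CPU or memory.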

Flex Consumption Plan - Per-Function Event-Driven Scaling:

Uses per-function scaling logic where each function type can scale independently

HTTP triggers, Blob (Event Grid) triggers, and Durable Functions share instances as groups

All other trigger types (Queue, Service Bus, Cosmos DB, Event Hubs, etc.) scale on dedicated instances

Always-ready instances option available to eliminate cold starts

Scaling behavior:

- Scale-out: Faster than Consumption plan with more aggressive scaling logic

- Scale-in: Graceful shutdown with up to 60 minutes for running functions

- Scale-to-zero: Optional (can configure always-ready instances)

- Max instances: 1000 per function app

Scaling speed: Fastest - optimized scaling with pre-provisioned capacity

Premium Plan (Elastic Premium) - Event-Driven with Always-Ready Instances:

Uses the same event-driven scale controller as Consumption plan

Maintains always-ready instances (minimum 1) that never scale to zero

Pre-warmed instances act as a buffer for immediate scale-out

Scales additional instances dynamically based on event load

Scaling behavior:

- Scale-out: Immediate (pre-warmed instances become active, new instances added if needed)

- Scale-in: Graceful shutdown with up to 60 minutes for running functions

- Scale-to-zero: Never - always maintains minimum instance count

- Max instances: 100 (Windows) or 20-100 (Linux)

Scaling speed: Very fast - no cold starts due to pre-warmed instances

Special features:

- VNet triggers with dynamic scale monitoring: Can enable runtime scale monitoring for triggers like Service Bus, Event Hubs, and Cosmos DB when behind VNet

- Better for scenarios requiring predictable performance with event-driven scaling

Dedicated Plan (App Service Plan) - Manual/Rule-Based Autoscale:

Uses Azure Monitor Autoscale with metric-based rules (CPU, memory, custom metrics, schedules)

Scaling decisions based on resource utilization thresholds, not event queue depth

Requires manual configuration of autoscale rules or manual scaling

Scaling behavior:

- Scale-out: Reactive, triggered when metrics exceed thresholds (e.g., CPU > 70%)

- Scale-in: Triggered when metrics fall below thresholds (e.g., CPU < 30%)

- Scale-to-zero: Not supported - requires “Always On” setting to keep functions active

- Max instances: 10-30 (regular) or 100 (App Service Environment)

Scaling speed: Slower - takes minutes to provision new VMs and start app instances

Why it’s slower:

- Must provision full VM instances (not just function host instances)

- Reactive scaling based on metrics (by the time threshold is hit, load may have peaked)

- Uses generic App Service autoscale, not optimized for event-driven workloads

- No built-in understanding of event queue depth

Configuration required:

- Define autoscale rules in Azure Monitor

- Set metric thresholds and scale-out/scale-in conditions

- Configure cooldown periods between scaling operations
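The rule-based behaviour described above can be sketched as a small state transition. This is an illustrative model only, not the Azure Monitor Autoscale engine: `AutoscaleRule` and `next_instance_count` are hypothetical names, and the thresholds reuse the example values from this section (scale out above 70% CPU, scale in below 30%).

```python
from dataclasses import dataclass


@dataclass
class AutoscaleRule:
    scale_out_cpu: float = 70.0   # scale out when average CPU exceeds this
    scale_in_cpu: float = 30.0    # scale in when average CPU drops below this
    cooldown_seconds: int = 300   # minimum time between scaling operations
    min_instances: int = 1
    max_instances: int = 10


def next_instance_count(
    rule: AutoscaleRule,
    current: int,
    avg_cpu: float,
    seconds_since_last_scale: int,
) -> int:
    """Apply one evaluation of the rule and return the new instance count."""
    if seconds_since_last_scale < rule.cooldown_seconds:
        return current  # still cooling down, no change allowed
    if avg_cpu > rule.scale_out_cpu:
        return min(rule.max_instances, current + 1)
    if avg_cpu < rule.scale_in_cpu:
        return max(rule.min_instances, current - 1)
    return current


rule = AutoscaleRule()
print(next_instance_count(rule, current=2, avg_cpu=85.0, seconds_since_last_scale=600))
```

Note that nothing in this rule looks at the event backlog, which is the core difference from the event-driven plans.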

Container Apps - Event-Driven with KEDA:

Uses KEDA (Kubernetes Event-Driven Autoscaling) for event-driven scaling

Leverages Kubernetes orchestration for container lifecycle management

Scale decisions based on event sources and custom scalers

Scaling behavior:

- Scale-out: Event-driven, similar to Premium plan

- Scale-in: Graceful shutdown for running functions

- Scale-to-zero: Supported (cold starts when scaling from zero)

- Max instances: 300-1000 depending on configuration

Scaling speed: Fast - event-driven scaling optimized for containerized workloads

Comparison Summary

The table below summarizes the key differences:

| Plan | Scaling Type | Speed | Scale to Zero | Max Instances | Cold Starts |
|---|---|---|---|---|---|
| Consumption | Event-driven | Fast | ✅ Yes | 200 (Win) / 100 (Linux) | Yes |
| Flex Consumption | Per-function event-driven | Fastest | ✅ Optional | 1000 | Optional (with always-ready) |
| Premium | Event-driven + always-ready | Very Fast | ❌ No (min 1) | 100 | No |
| Dedicated | Manual/Rule-based | Slower | ❌ No | 10-30 (100 ASE) | No (with Always On) |
| Container Apps | Event-driven (KEDA) | Fast | ✅ Yes | 300-1000 | Yes |

Key Takeaways:

- Event-driven plans (Consumption, Flex, Premium, Container Apps) monitor event sources and scale proactively

- Dedicated plan uses traditional autoscale based on resource metrics and reacts to load

- Premium and Flex offer the best balance of performance (no/minimal cold starts) and event-driven scaling

- Dedicated plan is best for predictable, steady workloads where you control scaling behavior

📄 Q. Role and structure of ‘function.json’, ‘host.json’ and ‘local.settings.json’ files?

Azure Functions uses several configuration files to define function behavior, runtime configuration and application settings.

Understanding these files is essential for developing and deploying functions effectively.

| File | Scope | Deployed to Azure | Purpose |
|---|---|---|---|
| function.json | Per-function | ✅ Yes | Define trigger and bindings for individual function |
| host.json | Function app | ✅ Yes | Global runtime and extension configuration |
| local.settings.json | Function app | ❌ No | Local development settings and secrets |

function.json (Function-Level Configuration): Defines the trigger, bindings, and configuration for an individual function. Each function has its own `function.json` file in its directory.

Location: `<FunctionApp>/<FunctionName>/function.json`

When Used:

- Required for script-based languages (JavaScript, Python, PowerShell); for Java it is generated from annotations at build time

- Not used for C# in-process functions (attributes used instead)

- Generated automatically for C# isolated worker process functions

Structure:

```json
{
  "bindings": [
    {
      "type": "queueTrigger",
      "direction": "in",
      "name": "myQueueItem",
      "queueName": "myqueue-items",
      "connection": "AzureWebJobsStorage"
    },
    {
      "type": "blob",
      "direction": "out",
      "name": "outputBlob",
      "path": "output/{rand-guid}.txt",
      "connection": "AzureWebJobsStorage"
    }
  ],
  "disabled": false,
  "scriptFile": "../dist/index.js",
  "entryPoint": "processQueueMessage"
}
```

Key Properties:

| Property | Description | Example Values |
|---|---|---|
| `bindings` | Array of trigger and bindings | See binding types below |
| `disabled` | Whether function is disabled | `true`, `false` |
| `scriptFile` | Path to the function code file | `"index.js"`, `"__init__.py"` |
| `entryPoint` | Function entry point name | `"run"`, `"main"` |

Binding Properties:

| Property | Description | Required |
|---|---|---|
| `type` | Binding type (queueTrigger, blob, http, etc.) | ✅ Yes |
| `direction` | Data flow: `in`, `out`, or `inout` | ✅ Yes |
| `name` | Variable name in function code | ✅ Yes |
| `connection` | App setting name for connection string | Depends on binding |
| Additional properties | Trigger/binding-specific settings | Varies |

Common Binding Types:

- Triggers: `httpTrigger`, `queueTrigger`, `blobTrigger`, `timerTrigger`, `serviceBusTrigger`, `eventHubTrigger`, `cosmosDBTrigger`, `eventGridTrigger`

- Bindings: `http`, `queue`, `blob`, `table`, `cosmosDB`, `serviceBus`, `eventHub`
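The required binding properties listed above lend themselves to a quick sanity check before deployment. The following is a minimal sketch under stated assumptions: `check_function_json` is a hypothetical helper, and it identifies triggers by the `Trigger` suffix of the `type` value, which matches the trigger names in this article but is only a heuristic.

```python
import json

# Properties every binding must declare, per the table in this section.
REQUIRED_BINDING_KEYS = {"type", "direction", "name"}


def check_function_json(text: str) -> list[str]:
    """Return a list of human-readable problems found in a function.json document."""
    config = json.loads(text)
    bindings = config.get("bindings", [])
    problems: list[str] = []
    for index, binding in enumerate(bindings):
        missing = REQUIRED_BINDING_KEYS - binding.keys()
        if missing:
            problems.append(f"binding {index} is missing: {', '.join(sorted(missing))}")
    # A function has exactly one trigger; heuristic: trigger types end in "Trigger".
    triggers = [b for b in bindings if str(b.get("type", "")).endswith("Trigger")]
    if len(triggers) != 1:
        problems.append(f"expected exactly one trigger, found {len(triggers)}")
    return problems


sample = """
{
  "bindings": [
    {"type": "queueTrigger", "direction": "in", "name": "myQueueItem",
     "queueName": "myqueue-items", "connection": "AzureWebJobsStorage"},
    {"type": "blob", "direction": "out", "name": "outputBlob",
     "path": "output/{rand-guid}.txt", "connection": "AzureWebJobsStorage"}
  ]
}
"""
print(check_function_json(sample))  # an empty list means no problems were found
```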

host.json - Function App-Level Configuration: Contains global configuration settings that affect all functions in the function app, including runtime behavior, logging, and extension settings.

Location: `<FunctionAppRoot>/host.json`

Versions: Configuration schema varies by Functions runtime version (v1.x, v2.x, v3.x, v4.x)

Structure (Functions v4.x):

```json
{
  "version": "2.0",
  "logging": {
    "logLevel": {
      "default": "Information",
      "Function": "Information",
      "Host.Aggregator": "Information"
    },
    "applicationInsights": {
      "samplingSettings": {
        "isEnabled": true,
        "maxTelemetryItemsPerSecond": 20,
        "excludedTypes": "Request;Exception"
      }
    }
  },
  "functionTimeout": "00:10:00",
  "extensions": {
    "http": {
      "routePrefix": "api",
      "maxConcurrentRequests": 100,
      "maxOutstandingRequests": 200
    },
    "queues": {
      "maxPollingInterval": "00:00:02",
      "visibilityTimeout": "00:00:30",
      "batchSize": 16,
      "maxDequeueCount": 5,
      "newBatchThreshold": 8
    },
    "serviceBus": {
      "prefetchCount": 0,
      "messageHandlerOptions": {
        "autoComplete": true,
        "maxConcurrentCalls": 16,
        "maxAutoRenewDuration": "00:05:00"
      }
    },
    "eventHubs": {
      "maxEventBatchSize": 10,
      "prefetchCount": 300,
      "batchCheckpointFrequency": 1
    },
    "durableTask": {
      "hubName": "MyTaskHub",
      "storageProvider": {
        "type": "azure_storage",
        "connectionStringName": "AzureWebJobsStorage"
      },
      "maxConcurrentActivityFunctions": 10,
      "maxConcurrentOrchestratorFunctions": 5,
      "extendedSessionsEnabled": true,
      "extendedSessionIdleTimeoutInSeconds": 300
    }
  },
  "extensionBundle": {
    "id": "Microsoft.Azure.Functions.ExtensionBundle",
    "version": "[4.*, 5.0.0)"
  },
  "concurrency": {
    "dynamicConcurrencyEnabled": true,
    "snapshotPersistenceEnabled": true
  }
}
```

Key Configuration Sections:

1. Global Settings:

- `version`: Schema version (`"2.0"` for v2.x+)

- `functionTimeout`: Maximum execution time

  - Consumption: `00:05:00` (default), max `00:10:00`

  - Premium/Dedicated: `00:30:00` (default), unlimited (max)

2. Logging Configuration:

- `logLevel`: Minimum log levels per category

- `applicationInsights`: Application Insights settings and sampling

3. Extension Configuration:

- `http`: HTTP trigger settings (routing, concurrency)

- `queues`: Storage Queue trigger behavior

- `serviceBus`: Service Bus trigger settings

- `eventHubs`: Event Hubs trigger configuration

- `durableTask`: Durable Functions orchestration settings

4. Extension Bundle:

- Manages binding extensions for non-.NET languages

- Automatic versioning and updates

5. Concurrency:

- `dynamicConcurrencyEnabled`: Adaptive concurrency control

- Optimizes throughput and resource usage
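A `functionTimeout` value that exceeds the plan's maximum is an easy mistake to make when moving an app between plans. The sketch below checks an `hh:mm:ss` value against the limits listed under Global Settings; `parse_timespan`, `timeout_allowed`, and the `MAX_TIMEOUT` table are hypothetical names, and the parser assumes the simple three-part format used in this article.

```python
from datetime import timedelta
from typing import Optional

# Maximum functionTimeout per plan as quoted in this article; None means no upper bound.
MAX_TIMEOUT: dict[str, Optional[timedelta]] = {
    "Consumption": timedelta(minutes=10),
    "Premium": None,
    "Dedicated": None,
}


def parse_timespan(value: str) -> timedelta:
    """Parse an 'hh:mm:ss' string such as '00:10:00' into a timedelta."""
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def timeout_allowed(plan: str, function_timeout: str) -> bool:
    """Return True if the configured timeout fits within the plan's maximum."""
    limit = MAX_TIMEOUT[plan]
    return limit is None or parse_timespan(function_timeout) <= limit


print(timeout_allowed("Consumption", "00:10:00"))
print(timeout_allowed("Consumption", "00:15:00"))
```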

local.settings.json - Local Development Settings: Contains app settings and connection strings for local development. This file is not deployed to Azure and is normally kept out of source control via `.gitignore`.

Location: `<FunctionAppRoot>/local.settings.json`

Structure:

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "FUNCTIONS_WORKER_RUNTIME": "node",
    "AzureWebJobsFeatureFlags": "EnableWorkerIndexing",
    "MyCustomSetting": "CustomValue",
    "CosmosDbConnectionString": "AccountEndpoint=https://...;AccountKey=...;",
    "ServiceBusConnection": "Endpoint=sb://...;SharedAccessKeyName=...;SharedAccessKey=...",
    "APPINSIGHTS_INSTRUMENTATIONKEY": "your-instrumentation-key"
  },
  "Host": {
    "LocalHttpPort": 7071,
    "CORS": "*",
    "CORSCredentials": false
  },
  "ConnectionStrings": {
    "SqlConnectionString": "Server=...;Database=...;User Id=...;Password=...;"
  }
}
```

Key Sections:

1. Values (App Settings):

| Setting | Description | Example |
|---|---|---|
| `AzureWebJobsStorage` | Storage account for function runtime | `UseDevelopmentStorage=true` (local) |
| `FUNCTIONS_WORKER_RUNTIME` | Language runtime | `dotnet`, `node`, `python`, `java`, `powershell` |
| `AzureWebJobsFeatureFlags` | Enable preview features | `EnableWorkerIndexing` |
| Custom settings | Your application settings | Any key-value pairs |
| Connection strings | Service connections | Used by `connection` property in bindings |

2. Host (Local Runtime Settings):

- `LocalHttpPort`: HTTP trigger port (default: 7071)

- `CORS`: Cross-origin resource sharing settings

- `CORSCredentials`: Allow credentials in CORS

3. ConnectionStrings:

- Alternative to storing connections in `Values`

- More semantic for database connections

Important Notes:

- ⚠️ Never commit `local.settings.json` to source control (contains secrets)

- For Azure deployment, configure the same settings as application settings on the function app

- Use Azure Key Vault references for production secrets

- Azure Functions Core Tools: `func azure functionapp publish <app-name> --publish-local-settings` to sync settings
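When running under Core Tools, the entries in `Values` are exposed to function code as environment variables, just as application settings are in Azure. For scripts or unit tests that run outside the Functions host, a small loader can mimic that behaviour. This is a sketch only: `load_local_settings` is a hypothetical helper, not part of Core Tools.

```python
import json
import os
from pathlib import Path


def load_local_settings(path: str = "local.settings.json") -> dict:
    """Copy the 'Values' section of local.settings.json into os.environ.

    Existing environment variables win, so real settings are never overwritten.
    Returns the loaded values, or an empty dict if the file does not exist.
    """
    settings_file = Path(path)
    if not settings_file.exists():
        return {}
    document = json.loads(settings_file.read_text(encoding="utf-8"))
    values = document.get("Values", {})
    for key, value in values.items():
        os.environ.setdefault(key, str(value))
    return values


load_local_settings()
# Code under test can now read settings the same way it would in Azure:
storage = os.environ.get("AzureWebJobsStorage")
```

Reading settings through environment variables in both places keeps function code identical between local runs and Azure.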

### Best Practices

- function.json:

  - Use descriptive binding names

  - Leverage connection string references (don’t hardcode)

  - Set appropriate binding-specific settings (batch sizes, polling intervals)

- host.json:

  - Set `functionTimeout` based on your plan and workload

  - Configure extension settings for optimal performance

  - Enable dynamic concurrency for better throughput

  - Use sampling in Application Insights for high-volume apps

- local.settings.json:

  - Never commit to source control

  - Use Azurite or emulators for local development

  - Document required settings in README

  - Use environment-specific values

- Security:

  - Store secrets in Azure Key Vault

  - Use managed identities for Azure service connections

  - Reference Key Vault: `@Microsoft.KeyVault(SecretUri=https://...)`

🔄 Q. What are Durable Functions?

Durable Functions is an extension of Azure Functions that enables you to write stateful workflows in a serverless environment. While regular Azure Functions are stateless and event-driven, Durable Functions allow you to:

- Maintain state across function executions

- Orchestrate complex workflows with multiple steps

- Chain functions together in specific patterns

- Handle long-running processes that exceed the typical function timeout limits

Key Concepts:

- Orchestrator Functions: Define the workflow logic and coordinate the execution of other functions

- Activity Functions: The individual units of work that perform actual business logic

- Client Functions: Start and manage orchestration instances

Common Patterns:

- Function Chaining: Execute functions in a specific sequence

- Fan-out/Fan-in: Execute multiple functions in parallel and aggregate results

- Async HTTP APIs: Long-running operations with status polling

- Human Interaction: Workflows requiring external approval/interaction

- Monitoring: Recurring processes with flexible scheduling
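The shape of the first two patterns can be shown with plain `asyncio`. This is not the Durable Functions SDK and it has none of its durability (no checkpointing, no replay, no state persisted across restarts); the `activity`, `chain`, and `fan_out_fan_in` functions are hypothetical stand-ins meant only to illustrate the control flow an orchestrator expresses.

```python
import asyncio


async def activity(name: str, value: int) -> int:
    # Stand-in for an activity function; a real one would do I/O or business logic.
    await asyncio.sleep(0)
    return value * 2


async def chain(value: int) -> int:
    # Function chaining: each step consumes the previous step's output.
    for step in ("step1", "step2", "step3"):
        value = await activity(step, value)
    return value


async def fan_out_fan_in(values: list[int]) -> int:
    # Fan-out: start all activities at once. Fan-in: wait for all, then aggregate.
    results = await asyncio.gather(*(activity("work", v) for v in values))
    return sum(results)


print(asyncio.run(chain(1)))                   # 1 -> 2 -> 4 -> 8
print(asyncio.run(fan_out_fan_in([1, 2, 3])))  # 2 + 4 + 6 = 12
```

In Durable Functions the orchestrator plays the role of `chain` or `fan_out_fan_in`, while the framework records each completed activity so the workflow can resume after a restart.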

Durable Functions are supported across all Azure Functions hosting plans, but with different characteristics:

| Hosting Plan | Durable Functions Support | Notes |
|---|---|---|
| Consumption | ✅ Yes | Uses Azure Storage for state persistence |
| Flex Consumption | ✅ Yes | HTTP triggers share instances with Durable orchestrators |
| Premium | ✅ Yes | Best performance with always-ready instances |
| Dedicated | ✅ Yes | Full control over compute resources |
| Container Apps | ✅ Yes | Kubernetes-based orchestration with KEDA |

Configuration in host.json:

As shown in the host.json example earlier in this article, Durable Functions are configured in the host.json file under the `durableTask` extension:

```json
"durableTask": {
  "hubName": "MyTaskHub",
  "storageProvider": {
    "type": "azure_storage",
    "connectionStringName": "AzureWebJobsStorage"
  },
  "maxConcurrentActivityFunctions": 10,
  "maxConcurrentOrchestratorFunctions": 5,
  "extendedSessionsEnabled": true,
  "extendedSessionIdleTimeoutInSeconds": 300
}
```

Key Settings:

- `hubName`: Task hub name for organizing orchestrations

- `storageProvider`: Backend storage for state (Azure Storage, MSSQL, or Netherite)

- `maxConcurrentActivityFunctions`: Max parallel activity executions

- `maxConcurrentOrchestratorFunctions`: Max parallel orchestrator executions

- `extendedSessionsEnabled`: Performance optimization for replay-heavy scenarios

- `extendedSessionIdleTimeoutInSeconds`: How long an idle orchestrator is kept in memory for fast replay

⚠️ Q. What are Limitations of Azure Functions?

Azure Functions has various limitations that vary by hosting plan. Key constraints include:

Critical Limitations:

- Execution timeouts: 10 min max (Consumption), unlimited (Premium/Dedicated/Flex)

- Scaling limits: 200 instances (Consumption), 1,000 (Flex), 100 (Premium)

- Cold starts: 1-10+ seconds (Consumption), eliminated (Premium/Dedicated)

- Memory/CPU: Fixed on Consumption (~1.5 GB), customizable on other plans

- Networking: No VNet on Consumption, full VNet support on Premium/Dedicated/Flex

- Storage: 1 GB deployment limit (Consumption), 100 GB (Premium/Dedicated)

Plan-Specific Constraints:

- Consumption: No VNet, no deployment slots, cold starts, 10-min timeout

- Flex Consumption: Linux only, no deployment slots, limited regions

- Container Apps: No deployment slots, cold starts when scaling to zero

Performance Considerations:

- Latency: Cold starts impact first request, warm instances <10ms overhead

- Throughput: Varies by plan (200-1,000+ instances) and trigger type

- Scalability: Event-driven (Consumption/Flex/Premium) vs. metric-based (Dedicated)

📖 For comprehensive details on all limitations, mitigation strategies, and plan comparisons, see Azure Functions Limitations

📝 ADDITIONAL INFORMATION:

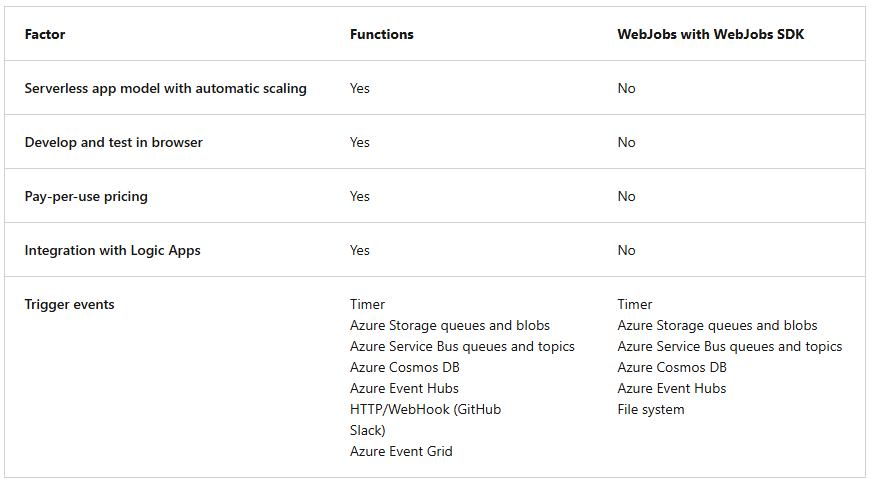

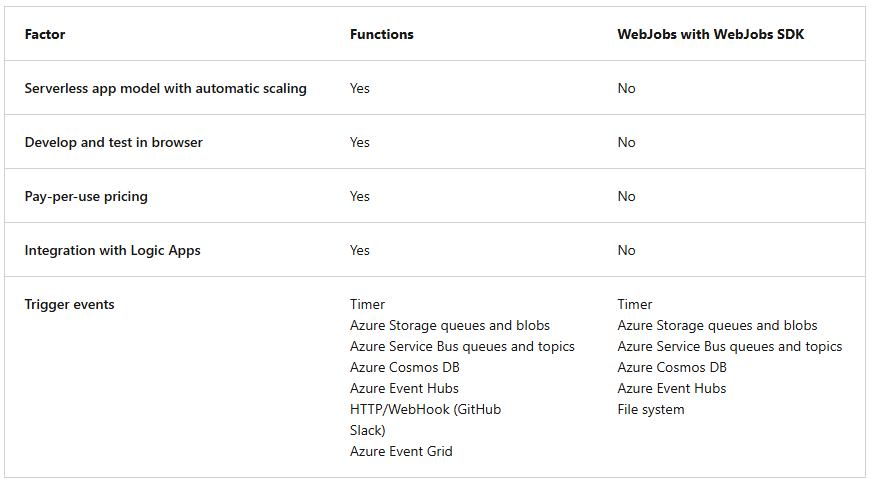

⚙️ Q. Compare Azure Functions to WebJobs?

📚 References

Azure Functions Official Documentation

- Azure Functions Overview - Introduction to Azure Functions and serverless computing on Azure

- Azure Functions Hosting Plans - Comprehensive guide to all hosting plans and their characteristics

- Azure Functions Consumption Plan - Detailed documentation on the Consumption plan

- Azure Functions Flex Consumption Plan - Documentation for the new Flex Consumption plan

- Azure Functions Premium Plan - Premium plan features and configuration

Triggers and Bindings

- Azure Functions Triggers and Bindings - Comprehensive guide to all available triggers and bindings

- Timer Trigger - CRON expressions and schedule-based execution

- Azure Storage Queue Trigger - Queue-based message processing

- Azure Blob Storage Trigger - Blob storage event handling

- Service Bus Trigger - Enterprise messaging with Service Bus

- Cosmos DB Trigger - Change feed processing with Cosmos DB

- Event Hubs Trigger - High-throughput event streaming

- HTTP Trigger - HTTP endpoints and webhooks

- Event Grid Trigger - Event-driven architectures with Event Grid

Scaling and Performance

- Azure Functions Scale and Hosting - How scaling works across different plans

- Event-Driven Scaling in Azure Functions - Deep dive into event-driven scaling mechanisms

- Azure Monitor Autoscale - Rule-based autoscaling for Dedicated plans

- KEDA - Kubernetes Event-Driven Autoscaling - Open-source project used by Container Apps

Durable Functions

- Durable Functions Overview - Stateful functions and orchestration patterns

- Durable Functions Patterns - Common orchestration patterns (chaining, fan-out/fan-in, async HTTP APIs, monitoring, human interaction)

- Durable Functions Task Hubs - Configuration and management of task hubs

- host.json Reference - Configuration settings including Durable Functions options

Container Apps

- Azure Container Apps - Overview of Container Apps environment

- Deploy Azure Functions to Container Apps - Running Functions in Container Apps

Comparison and Migration

- Compare Azure Functions and WebJobs - Detailed comparison of serverless options

- Choose Between Azure Services for Message-Based Solutions - Comparing Event Grid, Event Hubs, and Service Bus

Best Practices

- Azure Functions Best Practices - Performance, reliability, and security recommendations

- Optimize Performance and Reliability - Tips for production workloads

- Azure Functions Pricing - Cost considerations for different plans